manual semiotic

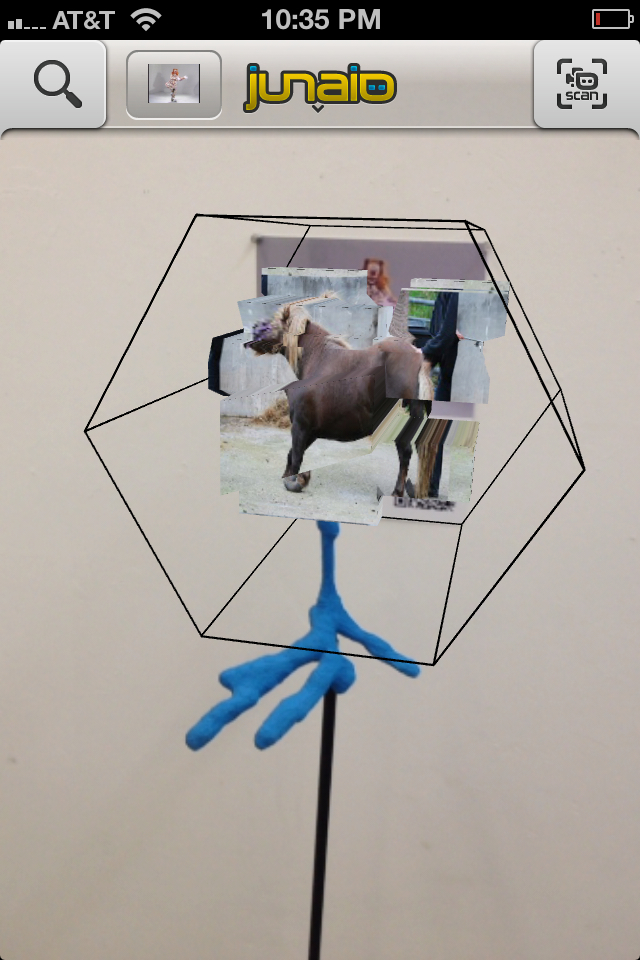

I experimented with Augmented Reality and I'm engaged by the territory it is opening up. Augmented reality uses the phone as a viewer for extra information about what you're looking at through the phone's camera lens. You need things, pictures, or spaces to trigger that extra information. Triggers are typically QR tags (a kind of two-dimensional bar code) or photographs, but they can also be objects and rooms. Once triggered, the phone overlays other information on the camera view: a graphic, a photograph, a movie, or even a 3D model.

For my project I used images as tags for 3D objects that had image texture maps on them. This opens up a semiotic, or language-based, use of images and objects. I say semiotic because at each level of experience the next image or object can condition the previous one. Somehow this linearity creates a cognitive pause as one's perceptual apparatus catches up to the representation, which is the condition of sign and of language. You don't see the images all at once as a single whole; rather, they are loaded in time, one after the next, and then again on an object. This serial viewing of images, in which content is laid one over the next and one after the other, is intriguing as a way to deliver content that can be formal on the one hand and carry some meaning or message on the other.

The other strange part is the ability to mix images, virtual 3D objects, real 3D objects, movies, texture maps, and so on.

This is not unlike a movie, and something weird happens as your eyes try to make sense of it all. It takes you into a new space, or at least you struggle to synthesize what you are seeing. It seems to be a way I can carry out my project: to explore objects that are deeply idiosyncratic and individuated.

It could also be good to consider this interface as a way to image, project, and imagine a full sculpture. The texture-mapped object can then be printed in full color, imagery and all, on Mcor IRIS printers. Nice.